In recent years, voice interfaces have become increasingly popular due to their convenience and accessibility. Integrating voice capabilities into web applications can greatly enhance user experience, allowing users to interact with applications naturally using speech commands. React, a JavaScript library for building user interfaces, provides a powerful platform for developing applications with voice interface functionalities. In this article, we’ll explore how to integrate voice interfaces into React applications, along with practical examples and code snippets to guide you through the process.

Understanding Voice Interfaces

Voice interfaces, also known as voice user interfaces (VUI), enable users to interact with computers and devices through spoken commands. These interfaces leverage speech recognition technology to interpret and process user input, allowing for hands-free interaction. Voice assistants like Amazon Alexa, Google Assistant, and Apple Siri have popularized voice interfaces by providing users with intuitive ways to perform tasks, access information, and control devices.

Benefits of Integrating Voice Interfaces with React

Enhanced Accessibility: VUIs empower users with visual impairments or physical limitations to navigate applications effortlessly.

Increased User Engagement: Voice interaction feels natural and intuitive, fostering a more engaging user experience.

Hands-Free Operation: VUIs enable interaction while users are performing other tasks, improving convenience and efficiency.

Broader Reach: Voice input makes your application easier to use on devices where typing is awkward, such as phones, wearables, and other small-screen or hands-busy setups.

Integrating Voice Interfaces with React

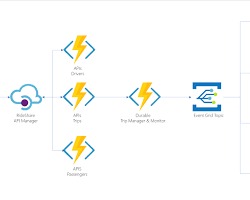

To integrate voice interfaces into React applications, we can leverage various libraries and APIs that provide speech recognition capabilities. One of the widely used libraries for this purpose is ‘react-speech-recognition’. This library simplifies the process of incorporating speech recognition functionality into React components.
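The library builds on the browser's Web Speech API, which not every browser implements. As a rough sketch, feature detection could look like the following. The helper name `getSpeechRecognitionConstructor` is hypothetical and not part of the library, and the global object is passed in as a parameter so the function can also be exercised outside a browser.

```javascript
// Hypothetical helper: returns the browser's speech recognition constructor,
// or null when the Web Speech API is unavailable.
// Chromium-based browsers expose it under the prefixed name webkitSpeechRecognition.
function getSpeechRecognitionConstructor(globalObject) {
  if (!globalObject) return null;
  return (
    globalObject.SpeechRecognition ||
    globalObject.webkitSpeechRecognition ||
    null
  );
}

// In a browser you would pass `window`; elsewhere this falls back to null.
const Recognition = getSpeechRecognitionConstructor(
  typeof window !== 'undefined' ? window : null
);
console.log(
  Recognition ? 'Speech recognition available' : 'Speech recognition not available'
);
```

A check like this is useful for deciding whether to show voice controls at all or to fall back to keyboard and mouse input.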

Setting Up the Environment

Before diving into the implementation, let’s set up our development environment. Ensure you have Node.js and npm (Node Package Manager) installed on your system. Create a new React project using Create React App or any other preferred method.

    npx create-react-app react-voice-interface
    cd react-voice-interface

Next, install the ‘react-speech-recognition’ library:

    npm install react-speech-recognition

Now, let’s create a basic React component to demonstrate voice recognition:

    // VoiceRecognition.js
    import React from 'react';
    import SpeechRecognition, { useSpeechRecognition } from 'react-speech-recognition';

    const VoiceRecognition = () => {
      // transcript: the text recognized so far; resetTranscript: clears it
      const { transcript, resetTranscript } = useSpeechRecognition();

      // Start capturing speech from the microphone
      const handleListen = () => {
        SpeechRecognition.startListening();
      };

      return (
        <div>
          <button onClick={handleListen}>Start Listening</button>
          <p>Transcript: {transcript}</p>
          <button onClick={resetTranscript}>Reset</button>
        </div>
      );
    };

    export default VoiceRecognition;

In this component, we import the ‘useSpeechRecognition’ hook from ‘react-speech-recognition’, which provides the transcript of the spoken words along with a function to reset it. We also import ‘SpeechRecognition’, which exposes the methods for starting and stopping the listening process. The ‘handleListen’ function starts speech recognition when the button is clicked.

Now, let’s use this component in our main ‘App.js’:

    // App.js
    import React from 'react';
    import VoiceRecognition from './VoiceRecognition';

    function App() {
      return (
        <div className="App">
          <h1>Voice Interface Demo</h1>
          <VoiceRecognition />
        </div>
      );
    }

    export default App;

With this setup, when you run the application (‘npm start’), you’ll see a button labeled “Start Listening.” Clicking this button activates the microphone (the browser will first ask for permission), and you can start speaking. The transcript of your speech is displayed below the button.
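The component above only starts listening. A small sketch of a start/stop toggle follows. `toggleListening` is a hypothetical helper, and it assumes the object passed in offers `startListening` and `stopListening`, as the library's `SpeechRecognition` export does. The `continuous` and `language` options come from the library's documented `startListening` options, so check them against the version you have installed.

```javascript
// Hypothetical helper: start or stop listening depending on the current state.
// `speech` is expected to look like the SpeechRecognition export of
// react-speech-recognition (startListening / stopListening).
// `listening` is the boolean reported by the useSpeechRecognition hook.
function toggleListening(speech, listening) {
  if (listening) {
    speech.stopListening();
    return 'stopped';
  }
  // continuous: keep listening after the user pauses (assumed option, see above)
  speech.startListening({ continuous: true, language: 'en-US' });
  return 'started';
}

// Stand-in object so the sketch can run outside a browser.
const fakeSpeech = {
  calls: [],
  startListening(options) { this.calls.push(['start', options]); },
  stopListening() { this.calls.push(['stop']); },
};

console.log(toggleListening(fakeSpeech, false)); // "started"
console.log(toggleListening(fakeSpeech, true));  // "stopped"
```

In the component, you would read `listening` from the hook and call `toggleListening(SpeechRecognition, listening)` from a single button's click handler.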

Enhancing Voice Interactions

While the basic setup provides a functional voice interface, we can enhance it further by adding commands and customizing responses based on user input. The ‘react-speech-recognition’ library lets us define custom commands by passing a ‘commands’ option to the ‘useSpeechRecognition’ hook.

Let’s extend our ‘VoiceRecognition’ component to recognize specific commands:

    // VoiceRecognition.js
    import React from 'react';
    import SpeechRecognition, { useSpeechRecognition } from 'react-speech-recognition';

    const VoiceRecognition = () => {
      const { transcript, resetTranscript } = useSpeechRecognition({
        commands: [
          {
            // Either phrase triggers the same callback
            command: ['hello', 'hi'],
            callback: () => alert('Hello! How can I assist you?'),
          },
          {
            // The callback runs later, once resetTranscript has been assigned
            command: 'reset',
            callback: () => resetTranscript(),
          },
        ],
      });

      // Start capturing speech from the microphone
      const handleListen = () => {
        SpeechRecognition.startListening();
      };

      return (
        <div>
          <button onClick={handleListen}>Start Listening</button>
          <p>Transcript: {transcript}</p>
          <button onClick={resetTranscript}>Reset</button>
        </div>
      );
    };

    export default VoiceRecognition;

In this updated version, we’ve defined two command entries: one that matches “hello” or “hi” and triggers an alert message, and one that matches “reset” and clears the transcript. Now, when you say “hello” or “hi” while the microphone is active, you’ll see an alert message.
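To make the matching idea concrete, here is a simplified sketch of how a transcript might be compared against a command list like the one in the component. It is an illustration only, not the library's actual implementation: `findMatchingCommand` is a hypothetical function, and it only handles exact phrases, ignoring case and surrounding whitespace.

```javascript
// Simplified illustration of command matching (NOT the library's implementation).
// Each entry has a `command` (string or array of strings) and a `callback`.
function findMatchingCommand(transcript, commands) {
  const spoken = transcript.trim().toLowerCase();
  for (const entry of commands) {
    // Normalize a single phrase to a one-element array.
    const phrases = Array.isArray(entry.command) ? entry.command : [entry.command];
    const matched = phrases.find((phrase) => phrase.toLowerCase() === spoken);
    if (matched !== undefined) {
      return { entry, matched };
    }
  }
  return null; // nothing in the list matched the transcript
}

const commands = [
  { command: ['hello', 'hi'], callback: () => 'Hello! How can I assist you?' },
  { command: 'reset', callback: () => 'transcript cleared' },
];

const result = findMatchingCommand(' Hi ', commands);
console.log(result ? result.entry.callback() : 'no match'); // "Hello! How can I assist you?"
```

Grouping several phrases under one entry, as with “hello” and “hi”, keeps the list short when different wordings should lead to the same action.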

Best Practices for Voice Interface Development in React

- Clear and Concise Prompts: Guide users on how to interact with the VUI using natural language.

- Error Handling and Feedback: Provide informative messages for speech recognition errors and invalid user utterances.

- Visual Cues: Complement voice interaction with visual elements that reinforce user actions.

- Contextual Understanding: Analyze previous interactions and user behavior to improve VUI accuracy and adapt responses.

- Accessibility: Maintain keyboard accessibility alongside voice interaction.
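As one possible way to apply the feedback and visual-cue points above, the sketch below maps recognition state to a user-facing message. `describeVoiceStatus` is a hypothetical helper. The field names `browserSupportsSpeechRecognition`, `isMicrophoneAvailable`, and `listening` mirror values returned by the `useSpeechRecognition` hook in recent versions of ‘react-speech-recognition’, which you should confirm for your installed version. The message wording is purely illustrative.

```javascript
// Hypothetical helper: turn recognition state into a short status message.
// The `state` fields are assumed to mirror values from useSpeechRecognition().
function describeVoiceStatus(state) {
  if (!state.browserSupportsSpeechRecognition) {
    return 'Voice input is not supported in this browser. Please use the keyboard instead.';
  }
  if (state.isMicrophoneAvailable === false) {
    return 'Microphone access is blocked. Please allow it in your browser settings.';
  }
  return state.listening
    ? 'Listening...'
    : 'Click "Start Listening" and speak a command.';
}

console.log(
  describeVoiceStatus({
    browserSupportsSpeechRecognition: true,
    isMicrophoneAvailable: true,
    listening: true,
  })
); // "Listening..."
```

Rendering this message next to the buttons gives users a visible cue about what the application is doing and offers a fallback when voice input is unavailable.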

Conclusion

Integrating voice interfaces into React applications opens up a new realm of possibilities for enhancing user experience. By leveraging libraries like ‘react-speech-recognition’, developers can create intuitive and interactive applications that respond to spoken commands. As voice technology continues to evolve, incorporating voice interfaces into web applications will become increasingly common, offering users seamless and accessible ways to interact with technology. With the examples and concepts discussed in this article, you’re well-equipped to explore and implement voice interfaces in your React projects, empowering users with hands-free interaction capabilities.

Happy coding!